Why iOS, though? 10 reasons why - for me - it beats out Android

Following last week's longer-than-expected 'how I came to iPhone in the 2020s' story, I wanted to list the reasons why the iPhone's human interface, iOS software and applications also help keep me from looking too hard at the Android competition...

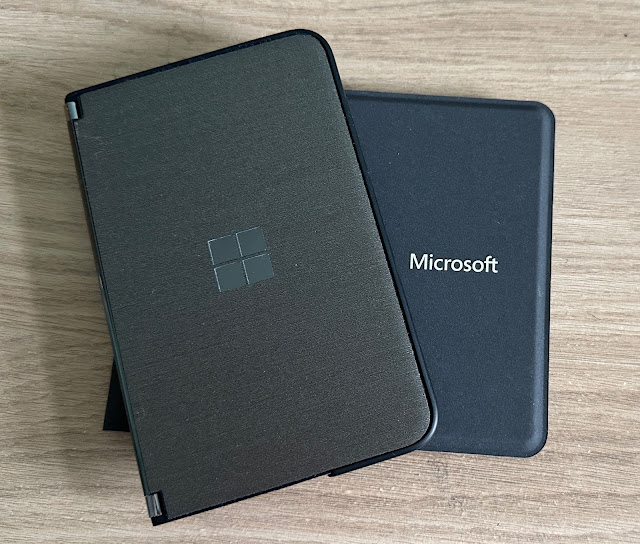

I should preface this list by saying that iOS and Android have both copied the best features from each other, and from earlier operating systems including Windows Phone, MeeGo, BlackBerry 10 and webOS. For 90% of practical use, iOS and Android are now interchangeable, and you can certainly set up your home screens and applications to mirror a setup you might have had on the competing OS.

However, that does leave the interesting 10%. So, away from mundanities like launching a web browser, running PIM and social media apps, or watching YouTube and Netflix (etc.), all of which are nigh identical on each OS, here are some of the reasons why, in 2025, I stay on the iPhone and iOS:

1. Face ID

It's easy to say that Apple's infrared-laser-dot-scanner 3D face authentication is just their quirky take on biometrics. But it's more than that because it's way, way better than either traditional camera-based face unlocking (which fails in poor or irregular light) or ultrasonic, optical, or capacitive fingerprint sensors (which fail, for me, due to poorly defined thumbprints).

In contrast, Face ID works in all lighting, including pitch dark, works in a split second, is secure enough to authorise financial transactions of almost any value, and, amazingly, is tied to algorithms that learn how your appearance changes over time (e.g. growing a beard, changing your hair, getting older).

It's such a game changer that when the Android world got a glimpse of something similar with Google's Pixel 4 series, the tech was instantly lauded. And yet Google fumbled the implementation, requiring apps to be explicitly updated to accommodate the new sensor, while Apple was able to slide Face ID in seamlessly as a replacement for Touch ID (as the name suggests, the fingerprint sensor used before the iPhone X) without developers having to lift a finger to accept the new authentication. As a result, Apple's system has flourished and Google's died a quick death.

2. Media handling

Now this is a subtlety, but an important one for me. No, I'm not talking about syncing music and movies from iTunes or similar apps on the Mac (though I do in fact sync over my main offline music library). I mean that iOS is much more efficient in the way it handles video files in particular.

For example, say I shoot a video on the iPhone (any model, not just a flagship) and want to do a trivial edit, such as chopping the beginning and end off. I tap the Edit icon and drag in the start/end markers. I tap 'Done' and 'Save video', and the video is instantly truncated. On Android, in Google Photos or similar, I drag in the end markers in the same way, yet when I'm finished the entire video has to be re-rendered to accommodate the new start and finish, which, on a longer video, takes a while. When you're used to instant saving on iOS, suddenly having to put your life (and phone) on pause while a video re-renders is very annoying.

As with Face ID, media handling is just baked into iOS at a low enough level that users (and their apps) can just enjoy the benefits. Which I do.

3. Bass Octave Shift

As I seem to be just about the only tech person in the world who ever writes about this, I'll spell it out here. All iPhones have bass octave shifting baked into their speaker drivers. By this I mean that sounds below about 100Hz are octave shifted by the OS (i.e. their frequencies are doubled, so each effectively becomes its own second 'harmonic'). In practice, bass notes in music that would otherwise be inaudible on tiny phone speakers (because 'physics') are shifted from, say, 60Hz to 120Hz, so they still sound in 'tune' with the rest of the music but can actually be heard.

OK, so you're not hearing the original deep rumbling (perhaps 20Hz) bass that the artist intended (they'd be horrified that you're hearing their music on phone speakers anyway!), but it's a lot better than not hearing any bass at all!

This bass octave shifting applies to all iPhones and makes music and soundtrack audio richer and 'punchier', in my experience.
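To make the arithmetic concrete: raising a note by one octave means doubling its frequency, which in equal temperament is exactly 12 semitones, so the note name stays the same and the bass stays 'in tune'. The Python sketch here only illustrates that arithmetic. The 100Hz cutoff is the approximate figure above, and the function names are hypothetical; Apple's actual audio processing isn't public, so this is not a model of how the iPhone does it.

```python
import math

# Approximate threshold from the text ("about 100Hz"); an illustrative assumption.
CUTOFF_HZ = 100.0


def octave_shift(freq_hz: float, cutoff_hz: float = CUTOFF_HZ) -> float:
    """Hypothetical helper: double a frequency (one octave up) if it sits below the cutoff."""
    return freq_hz * 2 if freq_hz < cutoff_hz else freq_hz


def semitones_from_a440(freq_hz: float) -> float:
    """Equal-tempered distance from A4 (440Hz), in semitones."""
    return 12 * math.log2(freq_hz / 440.0)


for f in (60.0, 80.0, 150.0):
    shifted = octave_shift(f)
    # A shifted note lands exactly 12 semitones (one octave) higher: same note name.
    gap = semitones_from_a440(shifted) - semitones_from_a440(f)
    print(f"{f:.0f}Hz -> {shifted:.0f}Hz ({gap:.0f} semitones higher)")
```

A 60Hz note becomes 120Hz, exactly one octave up, while 150Hz is already above the cutoff and is left alone.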

4. ProRAW

5. Ecosystem

6. Apple Weather

This may seem quirky, given how many weather apps are available for all operating systems. Hundreds, most of which use the same underlying data. But once upon a time there was Dark Sky, an independent weather app that was extraordinarily accurate and localised, thanks to its integration of real-time cloud tracking (e.g. "It will start raining in 5 mins and last 7 mins"). Apple then bought it and folded it into Apple Weather, which inherited its capabilities and presents them very prettily.

So yes, I can and do use other weather apps from time to time (e.g. BBC Weather and Google Weather), but Apple Weather's widget has pride of place on my main home screen, for its sheer real-time, localised accuracy.

7. Security

Having lived with every OS over the last 30 years (literally), I have a pretty good understanding of how well companies keep users safe and secure, which is ever more important now that so much of our lives is both online and managed from the phone.

And as such, I've been enormously impressed by how quickly Apple responds to vulnerabilities discovered in the OS and in the Safari browser. These aren't found often, but when they are, the appropriate update is offered within a day or two to every supported iPhone in the world. At the same time.

(In contrast, Android phones, even flagships, usually have to wait a month or so before OS fixes roll out, and even then only for specific phones from the last two to five years. The situation's getting better in Android land, but it's still some way behind iOS and iPhones.)

8. More reliable snaps

Away from arty nature shots and ProRAW, above, I've also come to depend on everyday snaps of moving subjects (think pets or humans indoors, in particular) being... well, more reliable than on other phones I've tested.

When Apple's Camera software is running, it's constantly reading the sensor and anticipating your tap on the on-screen shutter button. In practice, this means there's (literally) zero shutter lag. So what you see centred in the viewfinder at a particular moment is exactly what you'll capture. Not what's happening a tenth of a second later, or even longer, as on many other phone systems.
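'Constantly reading the sensor' is usually described as zero-shutter-lag capture. The camera keeps the most recent viewfinder frames in a small ring buffer and, when you tap, picks the one that matches the moment of the tap instead of starting a fresh exposure. Here is a minimal Python model of that general idea. The ZeroLagCamera class, the 8-frame buffer and the frame every 33ms (about 30fps) are all illustrative assumptions, not Apple's Camera code.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Frame:
    timestamp_ms: int
    label: str  # stand-in for the actual image data


class ZeroLagCamera:
    """Hypothetical model: keep the last few sensor frames in a ring buffer."""

    def __init__(self, capacity: int = 8):
        # deque with maxlen drops the oldest frame automatically when full
        self.buffer = deque(maxlen=capacity)

    def on_sensor_frame(self, frame: Frame) -> None:
        self.buffer.append(frame)

    def capture(self, tap_time_ms: int) -> Frame:
        """Return the buffered frame closest to the moment the shutter was tapped."""
        if not self.buffer:
            raise RuntimeError("no frames buffered yet")
        return min(self.buffer, key=lambda f: abs(f.timestamp_ms - tap_time_ms))


cam = ZeroLagCamera()
# Simulate 20 frames at ~30fps; the tap at 500ms is only processed after all of them,
# mimicking the delay between touching the screen and the app handling it.
for i in range(20):
    cam.on_sensor_frame(Frame(timestamp_ms=i * 33, label=f"frame {i}"))
print(cam.capture(tap_time_ms=500).label)  # prints: frame 15
```

Because the frame is chosen by timestamp rather than by when the tap is handled, any processing delay doesn't shift the captured moment, provided the buffer is long enough to still hold that frame.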
